Unveiling the Inner Workings of Neural Networks: A Deep Dive into Attention Maps in Python

Introduction

This article introduces attention maps: what they are, how to produce them in Python, and where they help in practice.

Table of Contents

- 1 Introduction

- 2 Unveiling the Inner Workings of Neural Networks: A Deep Dive into Attention Maps in Python

- 2.1 Understanding Attention Mechanisms: A Window into Neural Network Decision-Making

- 2.2 Visualizing Attention: The Power of Attention Maps

- 2.3 Implementing Attention Maps in Python: A Practical Guide

- 2.4 Applications of Attention Maps: Unlocking Insights Across Diverse Domains

- 2.5 FAQs: Addressing Common Questions About Attention Maps

- 2.6 Tips for Working with Attention Maps

- 2.7 Conclusion: The Future of Interpretable Deep Learning

- 3 Closure

Unveiling the Inner Workings of Neural Networks: A Deep Dive into Attention Maps in Python

Deep learning has advanced rapidly in recent years, particularly in natural language processing (NLP) and computer vision. Much of this progress is attributed to attention mechanisms, which let a neural network weight some parts of its input more heavily than others. Because those weights can be inspected, attention also offers a degree of interpretability. This article explains attention maps, shows how to produce them in Python, and surveys their applications.

Understanding Attention Mechanisms: A Window into Neural Network Decision-Making

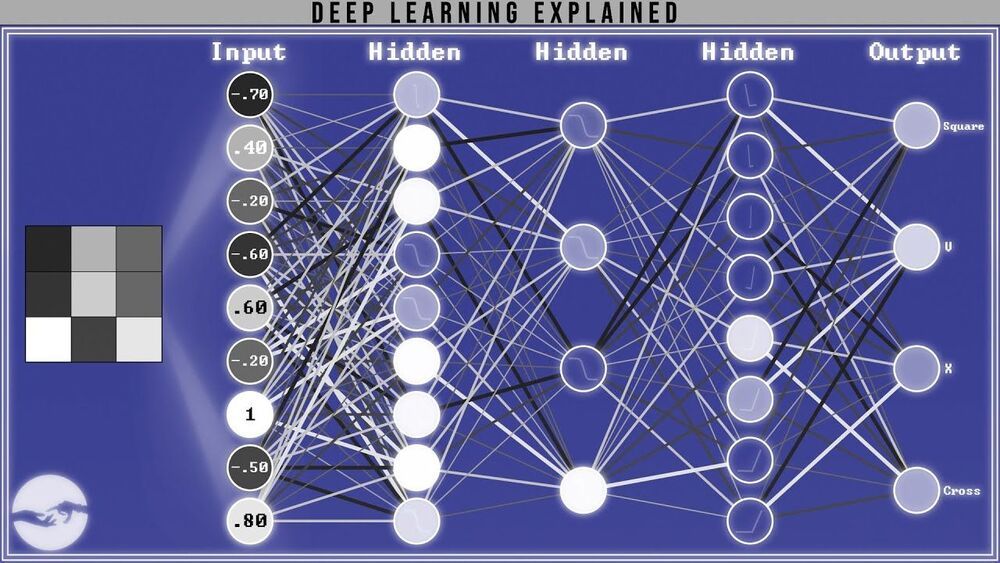

At its core, attention is a mechanism that enables a neural network to prioritize certain parts of its input while processing information. The network assigns each input element a weight, typically normalized so the weights sum to 1, and combines the elements according to those weights. Elements with larger weights contribute more to the result, which lets the model concentrate on the most relevant features.

Imagine a human reading a text. Our eyes don’t scan every word with equal intensity; we naturally focus on key phrases and sentences that hold the most meaning. Similarly, attention mechanisms in neural networks allow the model to "pay attention" to specific parts of the input, effectively mimicking this human-like selective focus.
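To make the idea of "paying attention" concrete, here is a minimal sketch of how raw relevance scores can be turned into attention weights with a softmax. The tokens and scores are made up for illustration; in a real model the scores would be computed from learned parameters.

```python
import math

def softmax(scores):
    """Turn raw relevance scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical relevance scores for the four tokens of a toy input.
tokens = ["the", "cat", "sat", "down"]
scores = [0.1, 2.0, 1.0, 0.1]
weights = softmax(scores)

for token, weight in zip(tokens, weights):
    print(f"{token:>5}: {weight:.3f}")
```

The token with the highest score receives the largest weight, but every token keeps a nonzero share, which is why attention is often described as a soft form of selection.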

Visualizing Attention: The Power of Attention Maps

Attention maps provide a visual representation of the attention weights assigned by the neural network to different parts of the input data. These maps are essentially heatmaps, where brighter areas indicate higher attention weights, signifying the parts of the input that the model considers most important.
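A heatmap of this kind can be sketched with Matplotlib as follows. The weight matrix is invented for illustration, and the helper name `plot_attention_map` is hypothetical rather than part of any library. The `viridis` colormap is chosen so that brighter cells correspond to larger weights.

```python
import numpy as np
import matplotlib
matplotlib.use("Agg")  # render off-screen so the script also runs without a display
import matplotlib.pyplot as plt

def plot_attention_map(weights, x_labels, y_labels, path=None):
    """Draw attention weights as a heatmap; brighter cells mean larger weights."""
    fig, ax = plt.subplots(figsize=(4, 3))
    # Fix the color scale to [0, 1] so different maps are comparable.
    image = ax.imshow(weights, cmap="viridis", vmin=0.0, vmax=1.0)
    ax.set_xticks(range(len(x_labels)))
    ax.set_xticklabels(x_labels, rotation=45, ha="right")
    ax.set_yticks(range(len(y_labels)))
    ax.set_yticklabels(y_labels)
    ax.set_xlabel("attended input position")
    ax.set_ylabel("query position")
    fig.colorbar(image, ax=ax, label="attention weight")
    fig.tight_layout()
    if path is not None:
        fig.savefig(path)
    return fig, ax

# Made-up weights for illustration only: each row sums to 1.
tokens = ["the", "cat", "sat"]
weights = np.array([
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.2, 0.5, 0.3],
])
fig, ax = plot_attention_map(weights, tokens, tokens)
```

Passing a file name as `path` (for example `"attention.png"`) writes the figure to disk.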

For example, in a machine translation task, an attention map can highlight the words in the source sentence that the model uses to generate a specific word in the target sentence. This visual insight offers valuable information about the model’s decision-making process, aiding in understanding its reasoning and identifying potential biases.
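The translation case can be illustrated with a small, hand-written attention matrix. The sentence pair and the weights below are hypothetical and were not produced by a trained model; the sketch only shows how one might read off the strongest source word for each target word.

```python
import numpy as np

# Hypothetical attention weights for translating French into English.
# Rows are generated target words, columns are source words; rows sum to 1.
source = ["le", "chat", "noir"]
target = ["the", "black", "cat"]
attention = np.array([
    [0.85, 0.10, 0.05],  # "the"   attends mostly to "le"
    [0.05, 0.15, 0.80],  # "black" attends mostly to "noir"
    [0.05, 0.85, 0.10],  # "cat"   attends mostly to "chat"
])

def strongest_alignment(attention, source, target):
    """For each target word, return the source word with the largest weight."""
    best = attention.argmax(axis=1)
    return {t: source[i] for t, i in zip(target, best)}

alignment = strongest_alignment(attention, source, target)
print(alignment)
```

Note how the word order differs between the two languages: the largest weights do not lie on the diagonal, which is exactly the kind of pattern an attention map makes visible.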

Implementing Attention Maps in Python: A Practical Guide

Implementing attention maps in Python involves a few key steps:

- Choosing the right architecture: Several attention mechanisms exist, each with its strengths and weaknesses. Popular choices include:

  - Self-attention: Allows the model to attend to different parts of the same input sequence.

  - Multi-head attention: Runs several attention heads in parallel, each of which can focus on different aspects of the input, and combines their outputs.

  - Scaled dot-product attention: A widely used and efficient way to compute attention weights, in which query-key dot products are scaled by the square root of the key dimension and passed through a softmax. It is used in both self-attention and multi-head attention.

- Using appropriate libraries: Libraries like PyTorch and TensorFlow provide tools for building attention layers and for retrieving the attention weights they compute.

- Training the model: Training a model with attention requires careful selection of hyperparameters, such as the learning rate and the number of heads, and of optimization techniques.

- Visualizing the attention map: Once the model is trained, extract the attention weights for an input of interest and plot them using libraries like Matplotlib or Seaborn.
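The mechanisms named in the list above can be sketched in a few lines of NumPy. This is an illustrative implementation under simplifying assumptions, not the code of any particular library: the projection matrices are random rather than trained, and the final output projection that multi-head attention normally applies is omitted. The function names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(q, k, v):
    """Return (output, weights) for queries q, keys k and values v.

    Shapes: q is (..., n_queries, d), k is (..., n_keys, d),
    v is (..., n_keys, d_v).
    """
    d = q.shape[-1]
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(d)
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights

def multi_head_self_attention(x, w_q, w_k, w_v, n_heads):
    """Self-attention: queries, keys and values all come from the same input x."""
    seq_len, d_model = x.shape
    d_head = d_model // n_heads

    def split_heads(t):
        # (seq_len, d_model) -> (n_heads, seq_len, d_head)
        return t.reshape(seq_len, n_heads, d_head).transpose(1, 0, 2)

    q, k, v = (split_heads(x @ w) for w in (w_q, w_k, w_v))
    out, weights = scaled_dot_product_attention(q, k, v)
    # Merge heads back: (n_heads, seq_len, d_head) -> (seq_len, d_model)
    out = out.transpose(1, 0, 2).reshape(seq_len, d_model)
    return out, weights

# Random stand-ins for an embedded sequence and trained projections.
rng = np.random.default_rng(0)
seq_len, d_model, n_heads = 5, 8, 2
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

output, attn = multi_head_self_attention(x, w_q, w_k, w_v, n_heads)
print(output.shape, attn.shape)
```

Here `attn` holds one `seq_len` by `seq_len` attention map per head, and each of those matrices is what would be plotted as a heatmap. In PyTorch, `torch.nn.MultiheadAttention` returns comparable weights as the second element of its output when called with `need_weights=True`.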

Applications of Attention Maps: Unlocking Insights Across Diverse Domains

Attention maps find applications in various domains, including:

- Natural Language Processing:

  - Machine Translation: Attention maps can reveal how the model aligns words across the two languages, which helps diagnose translation errors.

  - Text Summarization: Attention maps can highlight the most important sentences or phrases in a text, aiding in generating concise summaries.

  - Question Answering: Attention maps can pinpoint the specific words or phrases in a text that the model uses to answer a question.

- Computer Vision:

  - Image Captioning: Attention maps can highlight the regions of an image that the model focuses on while generating a caption.

  - Object Detection: Attention maps can help identify the key features that the model uses to detect specific objects in an image.

- Other Applications:

  - Time Series Analysis: Attention maps can help identify patterns and dependencies in time series data.

  - Recommender Systems: Attention maps can highlight the features that the model uses to recommend specific items to users.
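For the computer vision cases above, attention weights are often defined over a coarse grid of image patches rather than over individual pixels. A minimal sketch of one way to expand such weights to image resolution, so they can be overlaid on the picture, is shown below. It assumes a square grid of square patches; the helper name and the weights are hypothetical.

```python
import numpy as np

def patch_attention_to_image(patch_weights, grid_size, patch_size):
    """Expand one weight per patch into one weight per pixel.

    patch_weights: 1-D sequence of length grid_size * grid_size.
    Returns an array of shape (grid_size * patch_size, grid_size * patch_size)
    scaled to [0, 1] so it can be alpha-blended over the image.
    """
    grid = np.asarray(patch_weights, dtype=float).reshape(grid_size, grid_size)
    # Repeat each patch weight over its patch_size x patch_size block of pixels.
    pixels = np.kron(grid, np.ones((patch_size, patch_size)))
    span = pixels.max() - pixels.min()
    if span == 0:
        return np.zeros_like(pixels)
    return (pixels - pixels.min()) / span

# Hypothetical weights over a 2x2 grid of 4-pixel-wide patches.
heat = patch_attention_to_image([0.1, 0.6, 0.2, 0.1], grid_size=2, patch_size=4)
print(heat.shape)
```

The resulting array can be drawn on top of the image, for example with Matplotlib's `imshow` and a partial `alpha`. Block-wise repetition is the simplest choice; smoother interpolation is a common alternative.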

FAQs: Addressing Common Questions About Attention Maps

Q: What is the difference between attention and attention maps?

A: Attention is a mechanism that allows a neural network to focus on specific parts of its input data. Attention maps are visual representations of these attention weights, providing a visual understanding of the model’s decision-making process.

Q: Are attention maps always necessary?

A: While attention maps offer valuable insights, they are not always required. In cases where model interpretability is not a primary concern, attention maps may not be essential.

Q: How can I interpret attention maps?

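A: Interpreting attention maps requires understanding the specific task and the model architecture. Highlighted areas indicate the parts of the input that received the largest weights in a given layer or head. This is evidence of where the model drew information from, but not proof that those parts determined the prediction.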
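One simple aid to interpretation is to measure how concentrated each row of an attention map is. The sketch below uses Shannon entropy for this purpose. It is an illustrative heuristic with made-up weights, not a standard required step.

```python
import numpy as np

def attention_entropy(weights, eps=1e-12):
    """Shannon entropy (in nats) of each row of an attention matrix.

    Values near 0 mean a row is concentrated on one position; values near
    log(n_columns) mean the row is spread almost uniformly.
    """
    w = np.asarray(weights, dtype=float)
    return -(w * np.log(w + eps)).sum(axis=-1)

# Illustrative rows: one sharply focused, one uniform.
weights = np.array([
    [0.97, 0.01, 0.01, 0.01],
    [0.25, 0.25, 0.25, 0.25],
])
entropy = attention_entropy(weights)
print(entropy, "max possible:", np.log(weights.shape[-1]))
```

A row with entropy close to the maximum carries little information about which input mattered, so bright spots in such a row should not be read too closely.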

Q: Can attention maps be used to improve model performance?

A: Attention maps can indirectly contribute to model improvement by providing insights into the model’s decision-making process. These insights can be used to refine the model architecture, training data, or hyperparameters.

Tips for Working with Attention Maps

- Choose the right attention mechanism: Select the attention mechanism that best suits the specific task and data.

- Visualize attention maps effectively: Label both axes with the tokens or positions they represent, include a colorbar, and keep the color scale fixed when comparing maps.

- Interpret attention maps carefully: A high weight shows where a layer or head looked, not necessarily why the model made its prediction, so avoid over-interpreting individual maps.

- Use attention maps to improve model understanding: Leverage attention maps to gain insights into the model’s decision-making process and identify areas for improvement.

Conclusion: The Future of Interpretable Deep Learning

Attention maps represent a significant advancement in deep learning, offering a window into the inner workings of neural networks. They provide valuable insights into model behavior, enhancing interpretability and facilitating the development of more robust and reliable AI systems. As deep learning continues to evolve, attention maps will play a crucial role in shaping the future of interpretable AI, enabling us to better understand and trust the decisions made by these powerful systems.

Closure

We hope this article has clarified what attention maps are, how to produce them in Python, and where they are useful. Thank you for reading, and see you in our next article!