Mapping Values Between 0 and 1 in Python: A Comprehensive Guide

Rescaling values into a fixed range, most often 0 to 1, is a common step in data manipulation and analysis. This process, often referred to as scaling or normalization, simplifies comparison across features, can improve the performance of some algorithms, and makes visualizations clearer. Python and its libraries, notably NumPy and Scikit-learn, provide several ways to do it. This article covers the concept of mapping values between 0 and 1 in Python, the main techniques with their advantages and disadvantages, and practical applications.

The Significance of Mapping Values Between 0 and 1

Mapping values between 0 and 1, also known as min-max scaling, is a fundamental data preprocessing technique. It linearly transforms data from its original range to the range 0 to 1, so that all values are represented on a common scale. This rescaling offers several advantages:

- Enhanced Comparability: When dealing with datasets containing features with vastly different scales, comparing and analyzing these features directly becomes challenging. Normalization eliminates this disparity, allowing for meaningful comparison across features.

- Improved Algorithm Performance: Many machine learning algorithms, particularly those employing distance-based computations, like K-Nearest Neighbors or Support Vector Machines, perform better with normalized data. Without scaling, features with larger numeric ranges dominate the distance calculation; putting every feature on the same scale lets each one contribute comparably, which tends to improve accuracy and stability.

- Enhanced Visualization: Visualizing data with widely varying scales can lead to misinterpretations. Normalization ensures that all features contribute equally to the visualization, resulting in clearer representations and easier analysis of trends.
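The effect on distance-based methods can be illustrated with a small sketch. The sample values below (age in years, income in dollars) and the helper names `min_max_columns` and `distance` are hypothetical, chosen only to show how the nearest neighbor of a point can change once each column is rescaled to 0 to 1.

```python
import numpy as np

# Hypothetical samples: column 0 is age in years, column 1 is income in dollars.
samples = np.array([
    [25.0, 50_000.0],   # reference sample
    [60.0, 50_500.0],   # very different age, similar income
    [27.0, 52_000.0],   # similar age, slightly different income
    [45.0, 90_000.0],   # widens the income range
])

def min_max_columns(x):
    """Rescale each column of x to the range [0, 1]."""
    col_min = x.min(axis=0)
    col_max = x.max(axis=0)
    return (x - col_min) / (col_max - col_min)

def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return float(np.linalg.norm(a - b))

scaled = min_max_columns(samples)

# Distances from the reference sample to samples 1 and 2.
raw_d01 = distance(samples[0], samples[1])
raw_d02 = distance(samples[0], samples[2])
scaled_d01 = distance(scaled[0], scaled[1])
scaled_d02 = distance(scaled[0], scaled[2])

print(raw_d01 < raw_d02)        # True: on raw data, income dominates and sample 1 looks closer
print(scaled_d02 < scaled_d01)  # True: after scaling, sample 2 is the closer one
```

On the raw data the 35-year age gap is negligible next to income differences measured in hundreds of dollars; after scaling, both features contribute on the same footing.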

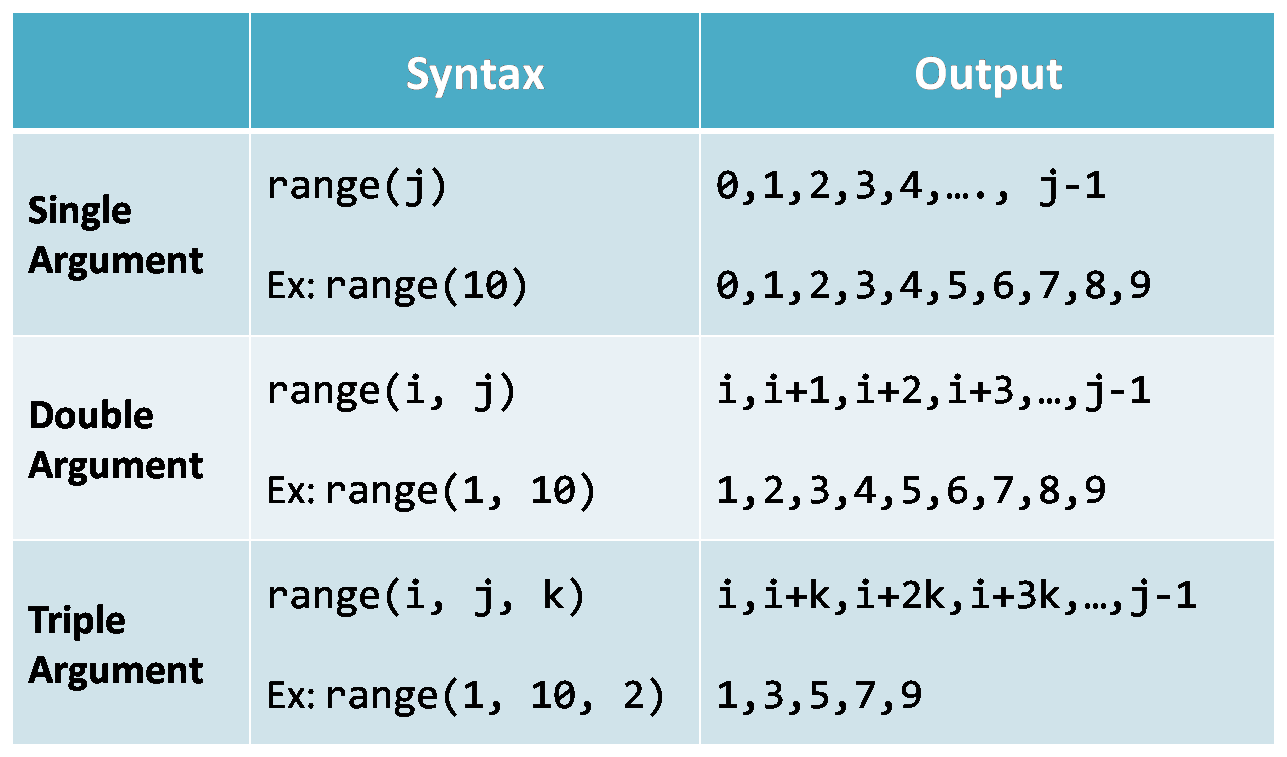

Methods for Mapping Values Between 0 and 1 in Python

Python offers a variety of methods to achieve this normalization, each with its strengths and weaknesses. Here are some of the most commonly employed techniques:

1. Min-Max Scaling:

Min-max scaling, the simplest and most intuitive method, linearly transforms data by mapping the minimum value to 0 and the maximum value to 1. The formula for min-max scaling is:

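    normalized_value = (value - min_value) / (max_value - min_value)

Example:

    import numpy as np

    data = np.array([10, 20, 30, 40, 50])

    # The smallest and largest observed values define the source range.
    min_val = np.min(data)
    max_val = np.max(data)

    # Shift so the minimum becomes 0, then divide by the range so the maximum becomes 1.
    normalized_data = (data - min_val) / (max_val - min_val)
    print(normalized_data)

Output:

    [0.   0.25 0.5  0.75 1.  ]

Advantages: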

- Simplicity and ease of implementation.

- Preserves the shape of the original distribution, because the transformation is linear.

Disadvantages:

- Sensitive to outliers, as a single outlier can significantly distort the scale.

- Undefined when all values are equal (the range is zero), and values outside the originally observed range map outside 0 to 1.
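The outlier sensitivity is easy to see in a small experiment. The following is a minimal sketch; the helper name `min_max` and the value 1000 used as an outlier are illustrative assumptions.

```python
import numpy as np

def min_max(values):
    """Min-max scale a 1D sequence to the range [0, 1]."""
    values = np.asarray(values, dtype=float)
    return (values - values.min()) / (values.max() - values.min())

clean = np.array([10, 20, 30, 40, 50])
with_outlier = np.array([10, 20, 30, 40, 50, 1000])  # one extreme value appended

print(min_max(clean))         # values spread evenly across [0, 1]
print(min_max(with_outlier))  # the first five values are squeezed below 0.05
```

Because the outlier sets the maximum, the five ordinary values end up crowded near 0 and most of the range is left empty.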

2. Standardization (Z-score):

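Standardization, also known as Z-score normalization, centers the data at zero and scales it to unit variance. Unlike min-max scaling, it does not confine values to the range 0 to 1; the results typically fall within a few units on either side of zero. The formula for standardization is: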

    normalized_value = (value - mean) / standard_deviation

Example:

    import numpy as np

    data = np.array([10, 20, 30, 40, 50])

    mean = np.mean(data)
    # np.std computes the population standard deviation by default (ddof=0).
    std = np.std(data)

    normalized_data = (data - mean) / std
    print(normalized_data)

Output:

    [-1.41421356 -0.70710678  0.          0.70710678  1.41421356]

Advantages:

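- Less distorted by a single extreme value than min-max scaling, although outliers still shift the mean and inflate the standard deviation.

- Works with data of any sign and does not depend on the observed minimum and maximum.

Disadvantages:

- Does not map values into the range 0 to 1; the output is unbounded and usually includes negative values.

- May not be suitable for algorithms or inputs that expect a bounded range.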
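Two details of standardization are worth checking numerically: the result depends on which standard deviation is used, and the output is not confined to 0 to 1. The sketch below assumes a hypothetical helper `z_score` that forwards NumPy's `ddof` argument; `ddof=0` divides by N (population), while `ddof=1` divides by N - 1 (sample).

```python
import numpy as np

data = np.array([10, 20, 30, 40, 50], dtype=float)

def z_score(values, ddof=0):
    """Standardize values using a standard deviation with the given ddof."""
    return (values - values.mean()) / values.std(ddof=ddof)

population_z = z_score(data)       # np.std default: divide by N
sample_z = z_score(data, ddof=1)   # sample estimate: divide by N - 1

print(population_z)
print(sample_z)

# The standardized values extend below 0 and above 1.
print(population_z.min(), population_z.max())
```

The two conventions give slightly different numbers for small datasets, so it helps to state which one was used when reporting standardized values.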

3. Using MinMaxScaler from Scikit-learn:

Scikit-learn, a popular machine learning library in Python, provides a convenient MinMaxScaler class for min-max scaling.

Example:

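    import numpy as np
    from sklearn.preprocessing import MinMaxScaler

    # Scikit-learn scalers expect a 2D array of shape (n_samples, n_features).
    data = np.array([10, 20, 30, 40, 50]).reshape(-1, 1)

    scaler = MinMaxScaler()
    # fit_transform learns the minimum and maximum, then rescales the data.
    normalized_data = scaler.fit_transform(data)
    print(normalized_data)

Output:

    [[0.  ]
     [0.25]
     [0.5 ]
     [0.75]
     [1.  ]]

Advantages: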

- Easy to use and provides consistent results.

- Offers flexibility with parameters like feature_range to customize the scaling range.

Disadvantages:

- Adds Scikit-learn as a dependency and expects 2D input of shape (n_samples, n_features).
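A fitted scaler stores the minimum and maximum it learned, so the same transformation can be reused on data it has not seen. The sketch below, with hypothetical `train` and `new_data` arrays, shows this along with the `feature_range` parameter and `inverse_transform`.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

train = np.array([10, 20, 30, 40, 50], dtype=float).reshape(-1, 1)
new_data = np.array([0, 25, 60], dtype=float).reshape(-1, 1)

# Learn the minimum (10) and maximum (50) from the training data only.
scaler = MinMaxScaler(feature_range=(0, 1))
scaler.fit(train)

# Apply the stored minimum and maximum to new values.
scaled_new = scaler.transform(new_data)
print(scaled_new.ravel())  # 0 and 60 fall outside [0, 1] because they lie outside 10..50

# Undo the scaling to recover the original units.
restored = scaler.inverse_transform(scaled_new)

# A different target range, here -1 to 1.
wide = MinMaxScaler(feature_range=(-1, 1)).fit_transform(train)
print(wide.ravel())
```

Fitting on the training data and only transforming later data keeps the mapping consistent, at the cost that new values beyond the fitted range land outside the target interval.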

4. Using StandardScaler from Scikit-learn:

Similar to MinMaxScaler, Scikit-learn offers a StandardScaler class for standardization.

Example:

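    import numpy as np
    from sklearn.preprocessing import StandardScaler

    # Scikit-learn scalers expect a 2D array of shape (n_samples, n_features).
    data = np.array([10, 20, 30, 40, 50]).reshape(-1, 1)

    scaler = StandardScaler()
    # fit_transform learns the mean and (population) standard deviation, then rescales.
    normalized_data = scaler.fit_transform(data)
    print(normalized_data)

Output:

    [[-1.41421356]
     [-0.70710678]
     [ 0.        ]
     [ 0.70710678]
     [ 1.41421356]]

Advantages: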

- Easy to use and provides consistent results.

- Offers flexibility with parameters like with_mean and with_std to control the scaling process.

Disadvantages:

- Adds Scikit-learn as a dependency and, like standardization in general, does not produce values between 0 and 1.
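The `with_mean` and `with_std` parameters can be explored with a short sketch. The variable names below are illustrative; `mean_` and `scale_` are the attributes in which a fitted StandardScaler stores what it learned.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

data = np.array([10, 20, 30, 40, 50], dtype=float).reshape(-1, 1)

# Default behavior: subtract the mean and divide by the standard deviation.
scaler = StandardScaler().fit(data)
print(scaler.mean_, scaler.scale_)  # per-column mean and standard deviation

# with_std=False only centers the data; the spread is left unchanged.
centered_only = StandardScaler(with_std=False).fit_transform(data)

# with_mean=False only divides by the standard deviation; the data is not centered.
scaled_only = StandardScaler(with_mean=False).fit_transform(data)

print(centered_only.ravel())
print(scaled_only.ravel())
```

Skipping the centering step is mainly useful when shifting the data is undesirable, for example when zeros in the input should remain zeros.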

5. Custom Functions:

You can also define your own custom functions to perform normalization. This allows for greater control and flexibility in handling specific data requirements.

Example:

    import numpy as np

    def custom_normalize(data, min_val=0, max_val=1):
        """
        Custom function for normalizing data to a target range.

        Args:
            data: The data to be normalized.
            min_val: The minimum value for the normalized range (default: 0).
            max_val: The maximum value for the normalized range (default: 1).

        Returns:
            The normalized data.
        """
        # Scale to [0, 1] first, then stretch and shift to [min_val, max_val].
        scaled = (data - np.min(data)) / (np.max(data) - np.min(data))
        return scaled * (max_val - min_val) + min_val

    data = np.array([10, 20, 30, 40, 50])
    normalized_data = custom_normalize(data)
    print(normalized_data)

Output:

    [0.   0.25 0.5  0.75 1.  ]

Advantages:

- Provides complete control over the normalization process.

- Allows for customization based on specific data characteristics.

Disadvantages:

- Requires more coding effort, and edge cases such as constant input (a range of zero) must be handled by hand.
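One edge case a custom function may need to cover is input in which every value is the same, since the min-max formula then divides by zero. The sketch below uses a hypothetical function `safe_min_max`; returning the lower bound for constant input is just one possible convention, not a standard.

```python
import numpy as np

def safe_min_max(data, new_min=0.0, new_max=1.0):
    """Min-max scale data to [new_min, new_max], tolerating constant input."""
    values = np.asarray(data, dtype=float)
    lo, hi = values.min(), values.max()
    span = hi - lo
    if span == 0:
        # All values are identical, so there is no range to stretch.
        # Assumption: map every element to new_min in that case.
        return np.full_like(values, new_min)
    return (values - lo) / span * (new_max - new_min) + new_min

print(safe_min_max([10, 20, 30, 40, 50]))
print(safe_min_max([7, 7, 7]))         # constant input, no division by zero
print(safe_min_max([0, 5, 10], -1, 1)) # custom target range
```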

Choosing the Right Normalization Technique

The choice of normalization technique depends on the specific dataset, the algorithm used, and the desired outcome. Consider the following factors:

- Data Distribution: If the data is roughly normally distributed, standardization is generally preferred. For data with known, fixed bounds, or when the algorithm expects inputs in a bounded range, min-max scaling might be more appropriate.

- Outliers: Standardization is less distorted by outliers than min-max scaling, but it is not immune to them, because outliers still affect the mean and standard deviation.

- Algorithm Sensitivity: Some algorithms are sensitive to the scale of the data, while others are not. Consult the documentation of the algorithm you are using to determine its sensitivity to scaling.

- Interpretability: Min-max scaled values are easy to interpret: each one expresses where the original value sits between the observed minimum (0) and maximum (1).

Applications of Mapping Values Between 0 and 1

Mapping values between 0 and 1 finds extensive applications in various domains:

- Machine Learning: Normalization is crucial for improving the performance of many machine learning algorithms, including clustering, classification, and regression.

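- Image Processing: Pixel intensities are often rescaled, for example from the 8-bit range 0 to 255 to the range 0 to 1, before being passed to image processing algorithms. Stretching the observed intensity range in this way can also enhance contrast.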

- Natural Language Processing: Once text has been converted to numerical features, such as word counts, those features are often scaled before tasks like sentiment analysis and text classification.

- Data Visualization: Normalization helps to create more informative and visually appealing visualizations, ensuring that all features are represented on a common scale.
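As a concrete illustration of the image processing case, the sketch below rescales 8-bit pixel intensities to 0 to 1. The tiny `image` array is a hypothetical stand-in for real image data, and dividing by 255 assumes 8-bit input.

```python
import numpy as np

# Hypothetical 2x3 grayscale image stored as 8-bit integers (0 to 255).
image = np.array([[0, 64, 128],
                  [191, 255, 32]], dtype=np.uint8)

# Cast to float32 explicitly, then divide by the largest possible 8-bit value.
pixels = image.astype(np.float32) / 255.0
print(pixels.min(), pixels.max())

# Map back to 8-bit integers to confirm nothing was lost.
restored = np.round(pixels * 255.0).astype(np.uint8)
print(np.array_equal(restored, image))
```

Dividing by the fixed value 255 rather than by the observed maximum keeps the mapping identical across images, which is usually what a downstream model expects.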

FAQs:

1. Is it always necessary to map values between 0 and 1?

While normalization is often beneficial, it is not always mandatory. Some algorithms, like decision trees, are not sensitive to the scale of the data. Additionally, normalization might not be necessary if the features already have a similar scale.

2. What if my data contains negative values?

Min-max scaling works with negative values without any adjustment: the smallest value, however negative, maps to 0 and the largest maps to 1. Standardization also handles negative values, but its output is centered on zero and is not confined to the range 0 to 1.
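A quick check with hypothetical temperature readings shows how the plain min-max formula behaves when the input contains negative numbers.

```python
import numpy as np

# Hypothetical temperature readings, including values below zero.
temperatures = np.array([-20.0, -5.0, 0.0, 10.0, 30.0])

lowest = temperatures.min()
highest = temperatures.max()
normalized = (temperatures - lowest) / (highest - lowest)

print(normalized)  # the most negative reading maps to 0, the largest to 1
```

Subtracting the minimum shifts the whole dataset so that it starts at zero, which is why the sign of the original values does not matter.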

3. How do I choose the appropriate normalization technique?

Consider the data distribution, presence of outliers, algorithm sensitivity, and the desired level of interpretability when selecting a normalization technique.

4. Can I normalize data with missing values?

Missing values should be handled before normalization. You can either impute missing values or exclude them from the normalization process.
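Both options can be sketched with NumPy, assuming missing values are encoded as NaN. Mean imputation is used here only as an example of a fill strategy.

```python
import numpy as np

data = np.array([10.0, np.nan, 30.0, 40.0, 50.0])

# Option 1: exclude NaN when finding the range; missing entries stay NaN.
lo, hi = np.nanmin(data), np.nanmax(data)
normalized_skip = (data - lo) / (hi - lo)

# Option 2: impute missing entries with the mean of the observed values, then normalize.
filled = np.where(np.isnan(data), np.nanmean(data), data)
normalized_filled = (filled - filled.min()) / (filled.max() - filled.min())

print(normalized_skip)
print(normalized_filled)
```

Using plain `min` and `max` on an array containing NaN would return NaN and turn every normalized value into NaN, which is why the NaN-aware functions are used in the first option.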

5. Is normalization the same as feature scaling?

Normalization and feature scaling are closely related but not identical. Normalization specifically refers to mapping values to a specific range, usually between 0 and 1. Feature scaling encompasses a broader range of techniques, including normalization, standardization, and other scaling methods.

Tips:

- Experiment: Try different normalization techniques and compare their impact on your algorithm’s performance.

- Consider the Algorithm: Choose a normalization technique that is compatible with the algorithm you are using.

- Handle Outliers: Address outliers before normalization to avoid skewing the scale.

- Document Your Choices: Clearly document the normalization technique used to ensure reproducibility and ease of understanding.

- Use Libraries: Utilize libraries like Scikit-learn for efficient and consistent normalization.
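The tip about handling outliers can be sketched as follows. Clipping to 1.5 times the interquartile range beyond the quartiles is one common convention and is assumed here purely for illustration; the right treatment depends on the data.

```python
import numpy as np

data = np.array([10, 20, 30, 40, 50, 1000], dtype=float)

# Compute the quartiles and the interquartile range (IQR).
q1, q3 = np.percentile(data, [25, 75])
iqr = q3 - q1

# Assumption: treat values beyond 1.5 * IQR from the quartiles as outliers and clip them.
lower_fence = q1 - 1.5 * iqr
upper_fence = q3 + 1.5 * iqr
clipped = np.clip(data, lower_fence, upper_fence)

# Normalize the clipped data with the usual min-max formula.
normalized = (clipped - clipped.min()) / (clipped.max() - clipped.min())

print(clipped)
print(normalized)
```

After clipping, the extreme value no longer stretches the range, so the remaining values spread over a much larger part of the interval from 0 to 1.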

Conclusion:

Mapping values between 0 and 1 in Python is a powerful data preprocessing technique that offers numerous advantages, including enhanced comparability, improved algorithm performance, and clearer visualizations. While multiple methods exist, choosing the appropriate technique depends on the specific data characteristics, algorithm sensitivity, and desired outcome. By understanding the principles and applications of normalization, data scientists and analysts can leverage this technique to effectively prepare and analyze their data, leading to more accurate and insightful results.
