Exploring Top K Queries with MapReduce in Python: A Comprehensive Guide

Related Articles: Exploring Top K Queries with MapReduce in Python: A Comprehensive Guide

Introduction

With enthusiasm, let’s navigate through the intriguing topic related to Exploring Top K Queries with MapReduce in Python: A Comprehensive Guide. Let’s weave interesting information and offer fresh perspectives to the readers.

Table of Content

Exploring Top K Queries with MapReduce in Python: A Comprehensive Guide

The ability to efficiently retrieve the top k most frequent elements from a massive dataset is a fundamental requirement in numerous data analysis tasks. This task, commonly known as a "top k query," finds applications in domains ranging from web analytics and search engine optimization to social media trend analysis and scientific data exploration.

While traditional approaches to solving top k queries may struggle with the scale and complexity of modern datasets, the MapReduce paradigm offers a powerful and scalable solution. This article delves into the intricacies of implementing top k queries using MapReduce in Python, highlighting its strengths and providing a practical implementation.

Understanding the Problem: Top K Queries

A top k query seeks to identify the k most frequent elements within a dataset. For instance, imagine a dataset containing user activity on a website. A top k query could be used to determine the top 10 most visited pages, the top 5 most popular search terms, or the top 3 most active users.

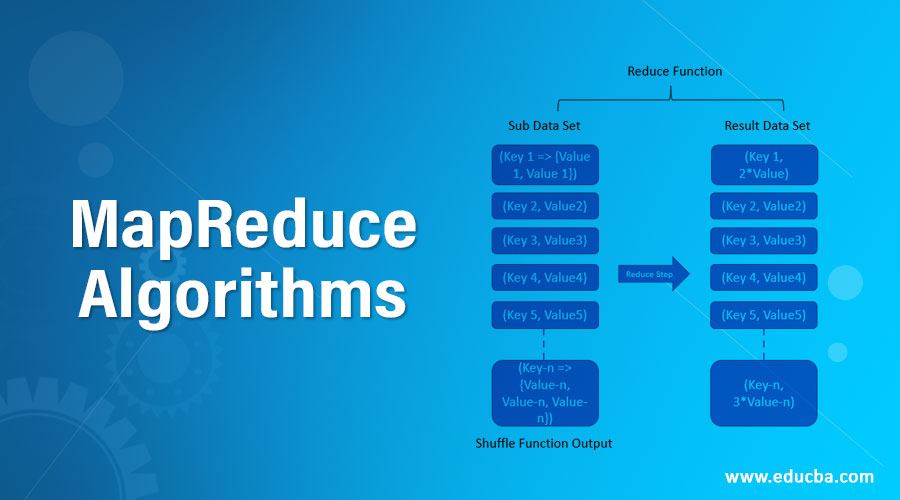

MapReduce: A Scalable Solution

MapReduce is a programming model designed for processing large datasets across distributed computing environments. Its core principle lies in dividing a complex task into smaller, independent subtasks that can be executed concurrently on multiple machines. This parallel processing capability makes MapReduce highly efficient for handling large datasets.

Implementing Top K Queries with MapReduce in Python

To illustrate the implementation of top k queries with MapReduce in Python, consider a dataset containing a list of words. Our goal is to find the top 10 most frequent words in this dataset.

1. Map Function:

The map function iterates through the dataset, processing each element individually. In this case, it counts the occurrences of each word:

def mapper(key, value):

for word in value.split():

yield word, 1The mapper takes a key-value pair as input. The key can be a unique identifier for each data point, and the value is the actual data itself. In this example, the input is a line of text, and the mapper function splits the line into individual words. For each word, it emits a key-value pair where the key is the word and the value is 1, representing a single occurrence.

2. Reduce Function:

The reduce function aggregates the results from the map phase. It groups together all values associated with the same key (word) and sums them to obtain the total count for each word:

def reducer(key, values):

total_count = sum(values)

yield key, total_countThe reducer takes a key and an iterable of values as input. It iterates through the values, sums them up, and emits a key-value pair representing the final count for the key.

3. Combining the Map and Reduce Functions:

To complete the top k query, we need to combine the map and reduce functions into a single workflow. We can leverage the mrjob library, a popular framework for running MapReduce jobs in Python:

from mrjob.job import MRJob

class TopKWordCount(MRJob):

def mapper(self, _, line):

for word in line.split():

yield word, 1

def reducer(self, key, values):

total_count = sum(values)

yield key, total_count

def steps(self):

return [

self.mr(mapper=self.mapper, reducer=self.reducer)

]

if __name__ == '__main__':

TopKWordCount.run()This code defines a MapReduce job named TopKWordCount. It defines the mapper and reducer functions as described earlier. The steps function defines the workflow of the job, specifying the map and reduce phases. Finally, the run() function executes the job.

4. Sorting and Selecting Top K:

The output of the MapReduce job is a list of key-value pairs, where the key is the word and the value is its count. To obtain the top k most frequent words, we need to sort this list based on the count in descending order and select the first k elements:

import operator

# Output of MapReduce job: [(word1, count1), (word2, count2), ...]

sorted_word_counts = sorted(output, key=operator.itemgetter(1), reverse=True)

top_k_words = sorted_word_counts[:k]This code uses the operator.itemgetter function to sort the output list based on the second element (count) in descending order. Then, it selects the first k elements from the sorted list to obtain the top k most frequent words.

Benefits of MapReduce for Top K Queries:

- Scalability: MapReduce leverages parallel processing, allowing it to handle massive datasets efficiently by distributing the workload across multiple machines.

- Fault Tolerance: MapReduce is designed to be fault-tolerant. If a machine fails during processing, the framework can automatically re-assign the tasks to other available machines, ensuring the job completes successfully.

-

Ease of Implementation: Frameworks like

mrjobsimplify the implementation of MapReduce jobs in Python, providing a high-level abstraction over the underlying distributed computing infrastructure.

FAQs about Top K Queries with MapReduce in Python:

Q: How does MapReduce handle data partitioning?

A: MapReduce automatically partitions the input data into smaller chunks, which are then processed by individual mapper tasks. The framework ensures that data related to the same key is processed by the same reducer task, ensuring efficient aggregation.

Q: What are the limitations of using MapReduce for top k queries?

A: While MapReduce is highly scalable, it can be less efficient for smaller datasets, where the overhead of setting up the distributed computing environment may outweigh the benefits of parallel processing. Additionally, the framework might not be the most suitable choice for real-time analysis where low latency is critical.

Q: Can I use other Python libraries for MapReduce besides mrjob?

A: Yes, other popular libraries like Hadoop Streaming and Spark can be used to implement MapReduce jobs in Python. These libraries offer different features and functionalities, so choosing the right one depends on your specific needs and the available infrastructure.

Tips for Implementing Top K Queries with MapReduce in Python:

- Optimize the Map Function: Ensure the map function is efficient by minimizing unnecessary operations and using appropriate data structures.

- Choose the Right Reducer: Select a reducer that effectively aggregates the data based on the specific requirements of the top k query.

- Utilize Data Partitioning: Leverage the data partitioning capabilities of the MapReduce framework to distribute the workload effectively and reduce communication overhead.

- Consider Data Skewness: If the dataset exhibits significant data skewness, where some keys have significantly higher frequencies than others, consider implementing strategies to handle the imbalance.

Conclusion

Implementing top k queries with MapReduce in Python offers a powerful and scalable solution for processing massive datasets. The framework’s ability to distribute the workload across multiple machines, its fault tolerance, and its ease of implementation make it an ideal choice for handling large-scale data analysis tasks. By understanding the core concepts of MapReduce and leveraging libraries like mrjob, developers can efficiently extract valuable insights from massive datasets, unlocking new possibilities for data-driven decision-making.

Closure

Thus, we hope this article has provided valuable insights into Exploring Top K Queries with MapReduce in Python: A Comprehensive Guide. We thank you for taking the time to read this article. See you in our next article!